AxioMagic under Spark on cloud (AWS, Azure, ...)


AxioMagic relies on functional composition over immutable inputs, which makes it a natural match for modern evaluation frameworks such as Apache Spark. Spark defines a structured approach to managing the data contexts of functional evaluation over data frames, and embodies a refinement of both the ETL and MapReduce methodologies. Distributed engines like Spark require computation and storage resources, which may come from any cluster but today are commonly provided by cloud environments, yielding high-volume functional computation at predictable, usage-based cost.
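A minimal plain-Python sketch of functional composition over immutable inputs follows. The `Reading` record, `compose`, and the two transforms are hypothetical illustrations, not AxioMagic's own API. The point is that each step returns a new record and never mutates its input.

```python
from dataclasses import FrozenInstanceError, dataclass, replace
from functools import reduce
from typing import Callable, Tuple


@dataclass(frozen=True)
class Reading:
    """Hypothetical immutable input record; assigning to a field raises FrozenInstanceError."""
    station: str
    celsius: float


Transform = Callable[[Reading], Reading]


def compose(*steps: Transform) -> Transform:
    """Compose transforms left to right: compose(f, g)(x) == g(f(x))."""
    return lambda x: reduce(lambda acc, step: step(acc), steps, x)


def round_temp(r: Reading) -> Reading:
    # replace() builds a new Reading; r itself is untouched
    return replace(r, celsius=round(r.celsius, 1))


def upper_station(r: Reading) -> Reading:
    return replace(r, station=r.station.upper())


pipeline = compose(round_temp, upper_station)
inputs: Tuple[Reading, ...] = (Reading("oslo", 3.14159), Reading("lima", 18.04))
outputs = tuple(pipeline(r) for r in inputs)
# outputs == (Reading("OSLO", 3.1), Reading("LIMA", 18.0)); inputs are unchanged

mutation_rejected = False
try:
    inputs[0].celsius = 0.0  # frozen dataclass: this assignment is refused
except FrozenInstanceError:
    mutation_rejected = True
```

Because each step is pure, an engine such as Spark can re-run it on any partition, for example after a task failure, and obtain the same result.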

We especially value Spark for open scientific dataset analysis, where it is common to work with large data files in interchange formats.

Essential features of Spark distributed evaluation

Spark assembles batch pipelines that pump datasets of structured records through functional tasks (which may invoke side effects, subject to all necessary stipulations). Each pipeline evaluates a given distributed functional algorithm for query or ETL.
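The transformation/action split behind such a pipeline can be modeled in a few lines of plain Python. `Pipeline` here is a hypothetical toy, not a Spark class. Like Spark, it only records transformations (`map`, `filter`) and runs nothing until an action (`collect`) asks for results, and each transformation returns a new plan rather than modifying the old one.

```python
from typing import Any, Callable, Iterable, List, Tuple

Step = Tuple[str, Callable[[Any], Any]]


class Pipeline:
    """Toy lazy batch pipeline: a source plus an immutable tuple of recorded steps."""

    def __init__(self, source: Iterable[Any], steps: Tuple[Step, ...] = ()) -> None:
        self._source = source
        self._steps = steps

    def map(self, f: Callable[[Any], Any]) -> "Pipeline":
        # Transformation: returns a new plan, runs nothing
        return Pipeline(self._source, self._steps + (("map", f),))

    def filter(self, pred: Callable[[Any], bool]) -> "Pipeline":
        # Transformation: returns a new plan, runs nothing
        return Pipeline(self._source, self._steps + (("filter", pred),))

    def collect(self) -> List[Any]:
        """Action: push every record through all steps and return the survivors."""
        out = []
        for rec in self._source:
            keep = True
            for kind, fn in self._steps:
                if kind == "map":
                    rec = fn(rec)
                elif not fn(rec):
                    keep = False
                    break
            if keep:
                out.append(rec)
        return out


calls: List[int] = []


def double(x: int) -> int:
    calls.append(x)  # record each invocation to show when work happens
    return x * 2


plan = Pipeline(range(5)).map(double).filter(lambda x: x > 4)
assert calls == []       # building the plan ran nothing
result = plan.collect()  # the action triggers evaluation
# result == [6, 8]
```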

The Spark application boundary is a SparkSession, which provides the execution context and API:

- Launches appropriately for given data science roles

- Runs compactly in development as a standalone desktop pipeline (local mode)

- Deploys to a scalable cluster or cloud for production

- Distributes tasks using a common JVM codebase and shared, read-only parameter data (broadcast variables)

- Jobs may be authored in chain-style code in Scala, Python, R, or Java, and may also be composed with interactive tools such as Amazon SageMaker

- Streaming runtime input may be processed as micro-batches, which at least keeps processing orderly. When micro-batching is too coarse-grained, consider alternative pipelines, e.g. Kafka.

- Each task feeds records into a pipeline of transformations and actions, producing writes and results

- Task results are shuffled to downstream tasks; Spark's shuffle is efficient, but it remains expensive because it moves data through disk and across the network

- Tasks may add to global accumulator variables, whose totals are read by the driver

- Task batches may be forcibly synchronized at barriers (barrier execution mode)

- Record schemas may be enforced at compile time (typed Datasets in Scala and Java) as well as at run time (DataFrame schemas).

- How strictly schemas are actually enforced depends on the Spark job author's intent, which flows from enterprise models and governance policies.
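Several items above (broadcast variables, shuffles, accumulators) can be pictured with a plain-Python toy model of one map stage, one shuffle, and one reduce stage. This is not Spark code. `UNITS`, `Accumulator`, `map_task`, `shuffle`, and `reduce_task` are hypothetical stand-ins for a broadcast variable, a Spark accumulator, and a `reduceByKey`-style aggregation, and everything runs in a single process.

```python
from collections import defaultdict
from types import MappingProxyType
from typing import Dict, List, Mapping, Tuple

Record = Tuple[str, float, str]  # (key, amount, unit)

# Read-only lookup table: stands in for a broadcast variable shipped to every task.
UNITS: Mapping[str, float] = MappingProxyType({"km": 1000.0, "m": 1.0})


class Accumulator:
    """Add-only counter: stands in for a Spark accumulator (tasks add, driver reads)."""

    def __init__(self) -> None:
        self._value = 0

    def add(self, n: int) -> None:
        self._value += n

    @property
    def value(self) -> int:
        return self._value


def map_task(partition: List[Record], units: Mapping[str, float],
             bad_records: Accumulator) -> List[Tuple[str, float]]:
    """One map task: convert each amount to metres, counting records with unknown units."""
    out = []
    for key, amount, unit in partition:
        factor = units.get(unit)
        if factor is None:
            bad_records.add(1)
            continue
        out.append((key, amount * factor))
    return out


def shuffle(mapped: List[List[Tuple[str, float]]],
            num_reducers: int) -> List[Dict[str, List[float]]]:
    """Route every (key, value) pair to a reducer bucket chosen by hashing the key."""
    buckets: List[Dict[str, List[float]]] = [defaultdict(list) for _ in range(num_reducers)]
    for part in mapped:
        for key, value in part:
            buckets[hash(key) % num_reducers][key].append(value)
    return buckets


def reduce_task(bucket: Dict[str, List[float]]) -> Dict[str, float]:
    """One reduce task: sum all values that arrived for each key."""
    return {key: sum(values) for key, values in bucket.items()}


# Driver side: two input partitions, two reducers.
partitions: List[List[Record]] = [
    [("a", 1.0, "km"), ("b", 5.0, "m")],
    [("a", 250.0, "m"), ("c", 2.0, "miles")],
]
bad = Accumulator()
mapped = [map_task(p, UNITS, bad) for p in partitions]
totals: Dict[str, float] = {}
for bucket in shuffle(mapped, num_reducers=2):
    totals.update(reduce_task(bucket))
# totals == {"a": 1250.0, "b": 5.0}; bad.value == 1
```

In real Spark the shuffle writes map output to local disk and reducers fetch it over the network, which is where its expense comes from. Spark also guarantees exactly-once accumulator updates only for updates made inside actions; updates made inside transformations, as `map_task` does here, may be applied more than once if a task is retried.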

Evaluating AxioMagic functions under Spark

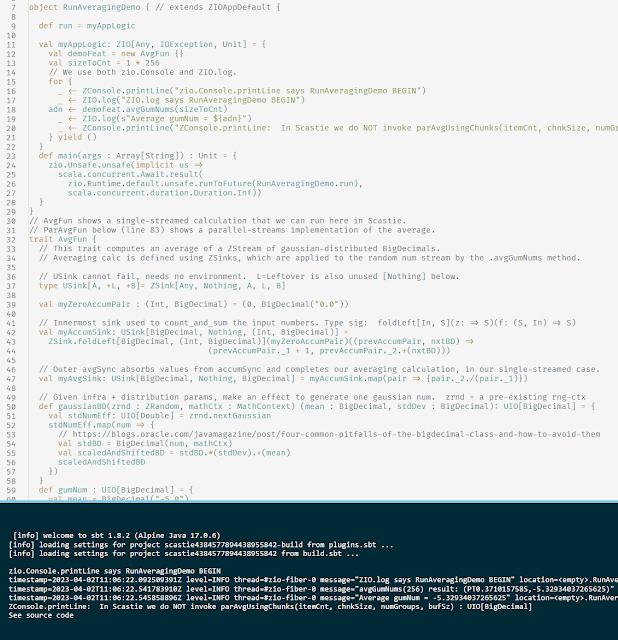

In 2019 we added a Spark batching proof of concept (POC) to the AxioMagic demo project axiomagic.dmo.hvol, using Spark 2.4.0. The integration is tested with a standalone desktop launch, which demonstrates classpath compatibility between spark-core, spark-sql, and AxioMagic's other input dependencies.

Spark on AWS cloud EMR

For commercial scale-up of AxioMagic data transform features, AWS EMR is a reasonable approach for many enterprises. The in-house team maintains a Spark-compatible codebase that is testable on desktops, while offloading the burden of hosting production analytics to AWS. This duality keeps viable escape pathways open, both to platforms outside AWS (including self-hosted lakehouses over files!) and to differently shaped services within AWS.
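One way to preserve that desktop-testable duality is to write transforms that depend on neither Spark nor AWS. The sketch below assumes an RDD whose elements are Python dicts; `normalize_partition` is a hypothetical example with the iterator-in, iterator-out shape that PySpark's `RDD.mapPartitions` accepts. The same function can be unit-tested locally against a plain list and later passed to `rdd.mapPartitions(normalize_partition)` on EMR or any other Spark cluster.

```python
from typing import Dict, Iterable, Iterator

Row = Dict[str, object]


def normalize_partition(rows: Iterable[Row]) -> Iterator[Row]:
    """Hypothetical pure transform over one partition.

    Imports nothing from Spark or AWS, so it runs unchanged on a desktop,
    on EMR, or on a self-hosted cluster.
    """
    for row in rows:
        name = str(row.get("name", "")).strip()
        if not name:
            continue  # drop rows without a usable name
        yield {**row, "name": name.lower()}  # new dict; the input row is not mutated


def test_on_desktop() -> None:
    sample = [{"name": "  Alice "}, {"name": ""}, {"name": "BOB", "age": 40}]
    assert list(normalize_partition(sample)) == [
        {"name": "alice"},
        {"name": "bob", "age": 40},
    ]


test_on_desktop()
```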

Spark on EMR tasks - contrasted with AWS Lambda functions

Fun article from 2019 by Spark champion Bartosz Konieczny: AWS Lambda - does it fit in with data processing?

Spark on Azure HDInsight

Microsoft's Azure HDInsight runs "Apache Hadoop, Spark, Hive, Kafka, and more."